Introduction

Emerging technologies are poised to change the world. Artificial intelligence and autonomy in computer systems will most likely revolutionise life in multiple ways. These developments are also of military interest because they signify a shift in modern warfare: the ongoing militarisation of artificial intelligence and autonomy in computer systems has been described as the third revolution in warfare, after gunpowder and nuclear weapons (Future of Life Institute 2015). The technologies therefore offer significant advantages that must be considered.

Nevertheless, concerns already exist as to whether the technology can accommodate core principles of international humanitarian law. This paper therefore asks: what are the possible implications of implementing autonomous weapon systems in contemporary warfare? The main argument is that current concerns about whether autonomous weapon systems can accommodate international humanitarian law are highly relevant and worrying. Nonetheless, in specific circumstances, using autonomous weapon systems is not fundamentally more challenging than using non-autonomous weapon systems.

This paper begins by clarifying what autonomous weapon systems are and what they depend on: sophisticated artificial intelligence. It then examines the possible implications of implementing autonomous weapon systems on contemporary battlefields with regard to core principles of international humanitarian law. Lastly, it concludes that using autonomous weapon systems on the battlefield has both advantages and drawbacks, although it is still too early to reach a firm conclusion. The paper therefore recommends that these concerns could be mitigated by raising awareness among future political and military decision-makers.

Defining autonomous weapon systems

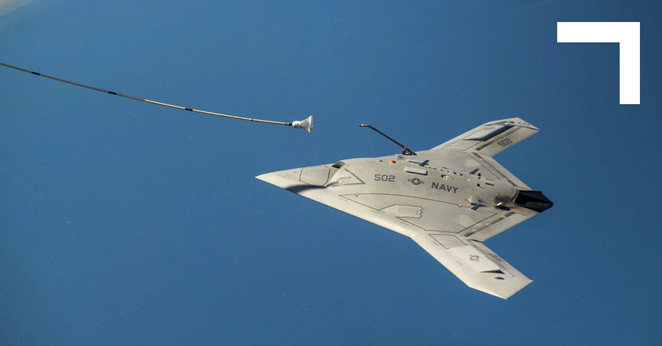

In this paper, autonomy is understood narrowly, in a technological sense: as a property of the computer systems inside weapon systems. An autonomous system processes the information its sensors perceive, and this processing is what makes the use of armed force feasible. In other words, autonomy concerns which tasks or functions the computer system performs on its own. This makes it difficult to categorise autonomous weapon systems and separate them from non-autonomous ones, because the two need not differ in appearance; an unmanned aerial vehicle and an autonomous aerial vehicle may look the same. A clear criterion, however, is whether the weapon system can select, identify, and engage targets without human interaction (Tamburrini 2016:122). A weapon system is autonomous if these autonomous functions are combined to operate a weapon that, when activated as intended, engages the targets it has selected (Hellström 2012:5). An illustrative example is a land-based vehicle whose computational system operates a machine gun, a cannon, or a rocket system and can decide by itself to engage the targets it identifies. Autonomy in weapon systems is therefore about how the system as a whole identifies, selects, and engages targets without human interaction.

Artificial intelligence

Artificial intelligence is what makes this feasible. It gives an autonomous system sufficient intelligence to perform tasks previously reserved for humans (Scharre 2018:5). An advanced form of intelligence would enable the system to understand the intent of a mission and to reach its objectives through its own decisions, without being pre-programmed (Ibid:32).

The way artificial intelligence is constructed and functions makes autonomy in weapon systems plausible. The system's learning algorithms enable it to adapt to its environment through trial and error (Suchman and Weber 2016:87f.). The autonomous system registers its environment through its sensors, optimises and verifies the information, and then calculates the best course of action (Cummings 2018:8). Within pre-defined operational parameters set by humans, the weapon system can then identify, select, and engage the targets that the artificial intelligence deems appropriate (Haas and Fischer 2017:286). This does not imply that autonomous systems today can write their own algorithms. However, advances in deep learning may produce more sophisticated forms of artificial intelligence that can learn from experience, which would make it plausible for a system to write its own algorithms (Scharre 2018:86f.). This points to a clear and consistent distinction: unlike automatic and automated systems, which reproduce the same behaviour, autonomous systems may produce different behaviours and thus become unpredictable (Cummings 2018:8). In other words, autonomy in systems is sophisticated enough that the internal cognitive processes are harder for the user to understand, which allows unpredictable decisions. The user may understand what tasks the autonomous system will solve, but not how it will solve them.

Relationship between human operators and autonomous weapon systems

The third distinction concerns the relationship between human operators and the systems. How far autonomous weapon systems can identify, select, and engage targets on their own depends on their degree of freedom from human interaction. Semi-autonomous weapon systems can select targets and adapt to them, but cannot engage them without human approval. Humans thus retain full decision-making power and are in the loop of the decision-making process (Scharre 2018:29). Supervised autonomous weapon systems differ from semi-autonomous ones in that they can engage targets without direct human approval. Human operators nevertheless remain on the loop: they can intervene in the decision-making process and stop the weapon system from engaging targets (Ibid:45). Such weapon systems exist today and are mainly used where the speed of engagement could overwhelm human operators. The American-made Phalanx Close-In Weapon System and the Norwegian-made Naval Strike Missile are relevant examples of supervised autonomous weapon systems. Finally, fully autonomous weapon systems can identify, select, and engage targets without any human approval, and humans are out of the loop (Scharre 2018:46). The possibility of human interaction is restricted, which gives the weapon system a high level of independence (Ibid:30). This does not mean that a fully autonomous weapon system has total control over itself. Operating with such a high degree of independence does, however, require sophisticated artificial intelligence that enables the system to function without human interaction.

Whether such systems exist depends on how system autonomy and fully autonomous weapon systems are defined and conceptualised. Nevertheless, battlefields on which weapon systems close to full autonomy operate are no longer merely an imagined future. Claims have emerged that an aerial vehicle autonomously targeted and engaged human combatants in the Libyan civil war in 2020 (Cramer 2021). Whether or not these claims are valid, they do not change the existing risks and concerns that arise when approaching autonomous weapon technology today.

The potential implications of the use of autonomous weapons for international humanitarian law

When weapon systems are used in engagements, human decision-makers must recognise and respect the central principles of international humanitarian law. These principles are found in the four Geneva Conventions of the 12th of August 1949 and in the two Additional Protocols of the 8th of June 1977. This paper examines three core principles that autonomous weapon systems may find difficult to accommodate: the principle of distinction, the principle of proportionality, and the principle of superfluous injury or unnecessary suffering.

The principle of distinction

The principle of distinction originates primarily in articles 48, 51(2) and 52(2) of the first Additional Protocol of 1977 to the Geneva Conventions. The principle establishes what are deemed legitimate and non-legitimate targets in armed conflict. Non-legitimate targets are civilian individuals and objects, and it is not permitted to engage them intentionally. However, non-legitimate targets can become legitimate targets if they take a direct part in hostilities (Protocol I 1977:264ff.).

One of the greatest challenges in using autonomous weapon systems is whether the systems can recognise a combatant's change of status to hors de combat, that is, from legitimate to non-legitimate target (article 41, Protocol I 1977:259f.). Such a change of status occurs when a combatant is no longer offensive, for example because of surrender or unconsciousness. Because autonomous weapon systems, and fully autonomous ones in particular, are not yet used to any significant extent, relevant battlefield data does not exist. This scarcity of data fuels uncertainty about whether these systems can recognise when a target's status changes to non-legitimate. Today, autonomous weapon systems do not possess a high degree of interpretive ability because sufficiently sophisticated artificial intelligence is absent. There is therefore scepticism about whether the weapon systems can perceive their environments well enough, which implies a significant risk of violating the principle of distinction. A similar argument was used in the late 1990s to support the ban on anti-personnel mines.

The principle of proportionality

The principle of proportionality originates in articles 51(5)(b) and 57(2) of the first Additional Protocol of 1977 to the Geneva Conventions. The principle concerns engagements that may be expected to cause incidental harm to non-legitimate targets, and it requires that such harm not be excessive in relation to the anticipated military advantage. The principle of proportionality therefore prohibits engagements in which the expected incidental loss would be excessive in relation to the military advantage (Protocol I 1977:265f.,269).

It is currently difficult to determine whether autonomous weapon systems can make proportionality assessments when engaging targets on the battlefield. The difficulty stems from uncertainty about how sophisticated today's artificial intelligence actually is. This uncertainty will also persist in the foreseeable future, given the open questions about how artificial intelligence will advance. Noel Sharkey argues: “[…] there is no sensing or computational capability that would allow a robot such a determination, and nor is there any known metric to objectively measure needless, superfluous or disproportionate suffering” (Sharkey 2008:88). Today, weapon systems with human-equivalent intelligence, able to identify and select targets as human soldiers do, remain hypothetical.

Nevertheless, the principles of distinction and proportionality are not fundamentally more challenging for autonomous weapon systems than for non-autonomous ones. Autonomous weapon systems may select targets more precisely than humans because they lack human reasoning and emotions: they follow a mathematically based logic rather than one shaped by feelings. However, the same point can also be turned into an ethical argument against their use.

The principle of superfluous injury or unnecessary suffering

Whether methods and means of engaging targets can be chosen that cause minimal damage to non-legitimate targets without sacrificing military advantage appears to be the main point of controversy in the debate about the implications of using autonomous weapon systems. The principle of superfluous injury or unnecessary suffering, also interpreted as a prohibition, originates in article 35(2) of the first Additional Protocol of 1977 to the Geneva Conventions. It is elaborated by the principle of military necessity in articles 51 and 57 (Protocol I 1977:265f.,269), which establish criteria for which methods and means may be applied to achieve a military advantage.

The principle of superfluous injury or unnecessary suffering does not necessarily restrict the use of autonomous weapon systems. There is currently insufficient data to conclude whether autonomous weapon systems will be prone to violating this principle. If they acquire identification and targeting capabilities that surpass human cognitive abilities, autonomous weapon systems could help reduce the number of dead and injured on the battlefield (Ronald Arkin, in Tamburrini 2016:124). Some incidental loss can also be accepted even when the functions that help the weapon system engage targets are not fully predictable. In this respect, using autonomous weapon systems is comparable to using artillery, such as self-propelled howitzers. When artillery engages targets, some shells may land outside the target area. Such a situation does not violate international humanitarian law, as long as the incidental loss is not excessive in relation to the military advantage.

Military decision-makers should consider the possibility that autonomous weapon systems may violate international humanitarian law to a greater degree than human soldiers. However, deploying autonomous weapon systems on the battlefield is not necessarily legally or ethically problematic, for example in defensive situations such as missile defence, or in offensive situations on battlefields where no non-legitimate targets are present. Many contemporary battlefields, however, are in urban areas, where non-legitimate targets will most likely always be present. The challenges are therefore greater when autonomous weapons are implemented as an offensive capability than as a defensive one. Finally, artificial intelligence more sophisticated than what exists today is essential if autonomous weapon systems are to accommodate the principles of international humanitarian law. Such artificial intelligence could allow autonomous weapon systems to conduct operations where speed and precision are critical, with a higher probability of success than if humans carried them out (Horowitz 2018).

Conclusion

Whether the use of autonomous weapon systems will bring more drawbacks than advantages remains to be seen. It is essential that the decision-makers who will deploy this technology are familiar with its limitations regarding the principles of international humanitarian law and with its dependency on sophisticated artificial intelligence. To that end, this paper has sought to identify possible implications of using autonomous weapon systems that might, in turn, violate core principles of international humanitarian law. It is difficult to conclude whether autonomous weapon systems currently undermine these principles, given that they are not yet used to a significant degree. This is at least true of fully autonomous weapon systems, which do not yet exist, although that depends on how they are defined and conceptualised.

Nevertheless, it is not premature to stress that political and military decision-makers urgently need to be concerned about the risks of violating international humanitarian law when implementing autonomous weapons on the battlefield. Employing these weapon systems without any such concerns would be irresponsible and unethical. Raising awareness of the likelihood that autonomous weapon systems can violate international humanitarian law might, however, prevent such violations to a significant degree. This can only happen if political and military decision-makers acquire knowledge of these specific issues.

Even if such awareness is secured, the questions of human control and accountability remain unsettled. Securing sufficient human control over autonomous weapon systems in particular is believed to be in most states’ interests, regardless of whether the principles of international humanitarian law would survive a high-technology war between great powers.

Cover photo: Northrop Grumman

References

Cramer, Maria, 2021: ‘A.I. Drone May Have Acted on Its Own in Attacking Fighters, U.N. Says’, nytimes.com, the 4th of June <https://www.nytimes.com/2021/06/03/world/africa/libya-drone.html> [Last accessed: 09.10.2021]

Cummings, Missy, 2018: ‘Artificial Intelligence and the Future of Warfare’ p. 7-18 in Mary Cummings, Heather Roff, Kenneth Cukier, Jacob Parakilas and Hannah Bryce, Artificial Intelligence and International Affairs: Disruption Anticipated (Chatham House Report), London: Chatham House, The Royal Institute of International Affairs.

Future of Life Institute, 2015: ‘Lethal Autonomous Weapons Systems’, futureoflife.org <https://futureoflife.org/lethal-autonomous-weapons-systems/> [Last accessed: 11.10.2021]

Haas, Michael and Sophie-Charlotte Fischer, 2017: ‘The evolution of targeted killing practices: Autonomous weapons, future conflict, and the international order’, Contemporary Security Policy, vol. 38(2): 281-306.

Hellström, Thomas, 2012: ‘On the Moral Responsibility of Military Robots’, Ethics and Information Technology: 1-16 (author's copy) <https://people.cs.umu.se/thomash/reports/ETIN2012.pdf> [Last accessed: 10.10.2021]

Horowitz, Michael, 2018: ‘The promise and peril of military applications of artificial intelligence’, thebulletin.org, the 23rd of April <https://thebulletin.org/2018/04/the-promise-and-peril-of-military-applications-of-artificial-intelligence/> [Last accessed: 11.10.2021]

Protocol I, 1977: ‘Protocol Additional to the Geneva Conventions of the 12th of August 1949, and Relating to the Protection of Victims of International Armed Conflicts (Protocol I), of the 8th of June 1977’, ihl-databases.icrc.org <https://ihl-databases.icrc.org/applic/ihl/ihl.nsf/INTRO/470> [Last accessed: 10.10.2021]

Scharre, Paul, 2018: Army of None: Autonomous Weapons and the Future of War, NY: W.W. Norton & Company.

Sharkey, Noel, 2008: ‘Grounds for Discrimination: Autonomous Robot Weapons’, RUSI Defence Systems, vol. 11(2): 86-89.

Suchman, Lucy and Jutta Weber, 2016: ‘Human-machine autonomies’ p. 75-102 in Nehal Bhuta, Susanne Beck, Robin Geiß, Hin-Yan Liu and Claus Kreß (eds.) Autonomous Weapons Systems: Law, Ethics, Policy, Cambridge: Cambridge University Press.

Tamburrini, Guglielmo, 2016: ‘On banning autonomous weapons systems: from deontological to wide consequentialist reasons’ p. 122-141 in Nehal Bhuta, Susanne Beck, Robin Geiß, Hin-Yan Liu and Claus Kreß (eds.) Autonomous Weapons Systems: Law, Ethics, Policy, Cambridge: Cambridge University Press.